GPT-2 was introduced in 2019 and was originally built with the TensorFlow framework. Let's look at how we can reproduce GPT-2 in PyTorch while following the methodology outlined in the accompanying GPT-2 research paper.

Optimizing language models is an important task as organizations integrate generative AI capabilities into their business processes. Relying on manual prompt engineering introduces risk, biases, and other problems. Continue reading to learn how to mitigate these risks with DSPy.

Understanding our world often requires interpreting stimuli from multiple sources, such as vision and text. LLaVA accomplishes this by combining a vision encoder with a large language model. Continue reading to learn how to leverage multimodal AI with Databricks and LLaVA! 🌋

For many AI-powered use cases, object detection emerges as a critical capability. Examples include driving cars autonomously, assisting with surgery, and identifying product defects. Continue reading to learn how to leverage Databricks and YOLOv8 to detect objects! 🤖

Generative AI is a compelling technology whether you are an artist looking for inspiration or a CEO looking to innovate. OpenAI captured the world's imagination after introducing ChatGPT. How can you get started with OpenAI's platform? Continue reading to learn more.

Deploying AI in the field remains a challenge for organizations. Thanks to recent innovation, constraints such as limited tools, employee skills, and hardware specifications are becoming blockers of the past. Continue reading to learn more.

Data science, ML, and AI are changing organizations. What does this mean for consultants and clients? Continue reading to learn about my experience as a data scientist at McKinsey.

Folks have compared data to oil in the past, and while the metaphor may be tired, the process of building pipelines in both cases consumes valuable time and resources, and often results in a mess. Read more to learn about Kedro!

Ingesting, cleaning, and analyzing data on Databricks accelerates your data science and machine learning projects by leveraging the underlying data lake and Spark engine. Continue reading to get started with Databricks.

Hyperopt enables you to perform optimization in parallel over a defined search space to find the optimal parameters. Continue reading to get started with Hyperopt.

Using optimization methods requires structuring problems, identifying objectives, and imposing constraints given a problem context. Continue reading to learn how to solve optimization problems in MATLAB and GAMS.

Probabilistic thinking requires knowledge of probability distributions and the relevant statistics associated with each. Understanding these distributions can help you recognize when to apply one. Continue reading to learn about the core concepts and essential distributions with examples in Python.

The SHAP framework unifies the methods used to interpret and explain machine learning models. This post explains SHAP with both a high-level overview and a Python example. Continue reading to get started with SHAP.

Data sets contain noise, but with high-powered or elegant data science, the relevant signal can be extracted. One key technique for analyzing real-world data, primarily for forecasting, is time-series analysis. A popular time-series forecasting procedure is Prophet, open-sourced by Facebook and implemented in both R and Python.

Carnegie Mellon University's Heinz College offered a unique opportunity to jointly study public policy, management, and data analytics. The coursework covers topics ranging from machine learning, deep learning, econometrics, and optimization to organizational design and decision-making. Continue reading to learn why I am glad I enrolled.

While seemingly a trivial task, classifying recipes into cuisines and understanding how to interpret clustering and classification results can help you creatively answer other questions. Continue reading to learn how.

Cyber attacks are becoming more common as more data are stored in digital form. Suspicious, and likely malicious, users are requesting access to unauthorized resources in the hope of finding vulnerable networks or systems. Continue reading to learn how to apply descriptive analytics to uncover who is generating these suspicious requests.

Neural networks are a growing area of research and are being applied to new problems every day. The classic examples are image classification, facial recognition, and self-driving cars. The future is uncertain, but there is a high probability that neural networks in some form will play a critical role in shaping it. Continue reading to learn about the core concepts and walk through an example in Python.

Data science is a broad and deepening field, but one divisive question still remains. In this post, I compare and contrast Python and R for use in different data science tasks. Continue reading to learn which one emerges triumphant.

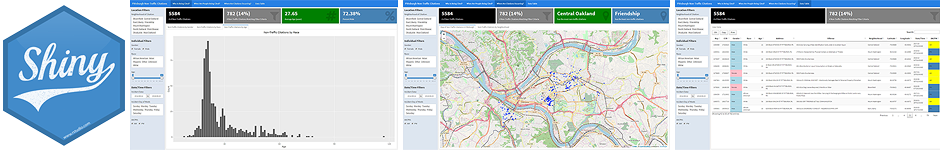

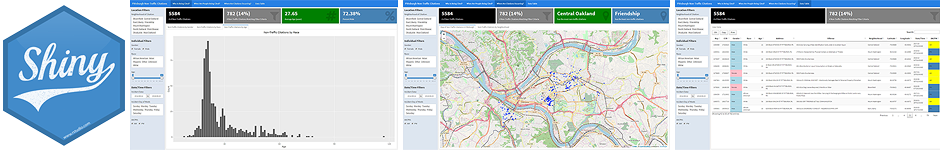

Shiny dashboards provide a simple and fast way to analyze and visualize data. Whether performing exploratory data analysis or building a robust tool for your client's executives, Shiny dashboards aid the data science process. Continue reading to walk through an example of constructing an R Shiny Dashboard.

Data science can change how any organization operates: not just Facebook or Amazon, but even the American Civil Liberties Union, an organization 10 times older than Facebook. Continue reading to learn about my experience interning with the ACLU's data science team in New York.

Across the United States, municipalities have turned to risk assessment instruments (RAIs) to help judges determine which individuals to release on bail and which ones to keep in custody. The risk assessment process varies based on the specific instrument used, but many rely on criminal recidivism data sets. These data sets typically contain various demographic indicators (age, race, gender, etc.) as well as criminal history (charges, juvenile record, etc.).

Broward County, Florida, has turned to one of the most popular RAIs today: COMPAS (Correctional Offender Management Profiling for Alternative Sanctions). COMPAS assesses individuals based on criminal history and social profiling to categorize each individual as low, medium, or high risk. This tool, however, was not developed using the Broward County data set, which may lead to poor predictive performance for individuals from Broward County. In this post, we construct an RAI, compare it to COMPAS, and discuss the findings.

The issue of gender-income disparity is not new, but it is nuanced. To study it, we rely on the National Longitudinal Survey of Youth, 1979 cohort, data set (abbreviated ‘NLSY79’). First, to draw strong conclusions, we must evaluate the data set provided: is it accurate, relevant, and useful for drawing statistical conclusions? Second, once we summarize the data, we discuss methodology: how should we approach the data, which variables should we consider, and which techniques are appropriate? Third, we openly discuss findings about the sampled individuals and attempt to infer, for the larger population, how the income difference (if any) between men and women relates to other factors. Lastly, we discuss the relevance and significance of our findings given the survey data available and the methodology used.

Understanding the confusion matrix is an important step in statistics, machine learning, or any other field where predictions or classifications are common. The confusion matrix is a two-dimensional contingency table (actual vs. predicted outcomes) that reveals how well a predictive model performs when the outcomes are known. Additionally, when the costs of false positives and false negatives differ, the trade-off between them can be optimized. Do you know the difference between Sensitivity, Specificity, Recall, Precision, True Positive Rate, and Positive Predictive Value?
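As a quick preview, here is a minimal sketch of how these metrics fall out of the four cells of a binary confusion matrix. The `ConfusionMatrix` class and the counts are hypothetical, for illustration only, and are not taken from the post. Note that several of the names are synonyms: sensitivity, recall, and true positive rate are the same quantity, as are precision and positive predictive value.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Hypothetical container for a binary classifier's counts against known outcomes."""
    tp: int  # true positives: predicted positive, actually positive
    fp: int  # false positives: predicted positive, actually negative
    fn: int  # false negatives: predicted negative, actually positive
    tn: int  # true negatives: predicted negative, actually negative

    def sensitivity(self) -> float:
        # Share of actual positives the model catches: TP / (TP + FN)
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # Share of actual negatives the model correctly rejects: TN / (TN + FP)
        return self.tn / (self.tn + self.fp)

    def precision(self) -> float:
        # Share of predicted positives that are actually positive: TP / (TP + FP)
        return self.tp / (self.tp + self.fp)

    # Aliases make the synonyms explicit (plain class attributes, not dataclass fields).
    recall = sensitivity
    true_positive_rate = sensitivity
    positive_predictive_value = precision


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    cm = ConfusionMatrix(tp=40, fp=10, fn=20, tn=30)
    print(f"sensitivity / recall / TPR: {cm.sensitivity():.3f}")  # 0.667 (40 / 60)
    print(f"specificity:                {cm.specificity():.3f}")  # 0.750 (30 / 40)
    print(f"precision / PPV:            {cm.precision():.3f}")    # 0.800 (40 / 50)
```

The aliases point at the same methods, so `cm.recall()` and `cm.sensitivity()` always agree; this mirrors the fact that the different names describe one ratio.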

Read the story about how I replaced my old website (from 2009) with a new one that is more modern, features easy publishing, and requires little maintenance. Along the way, I include tips and tricks that will help you create your own website.