Hands-on with Edge AI

Edge AI emerges at the intersection of AI, microcontrollers, and process automation. Jump-start your understanding of these topics and more!

Why is there so much hype around edge AI (and AI in general, see ChatGPT) and the value it has the potential to bring? Ask yourself: what if supply chains were more efficient and predictable, transportation networks were safer and more reliable, and energy systems were more secure and sustainable? Edge AI has the potential to radically improve all of these industries, along with others, thanks to advancements in AI technology, the accessibility of tiny compute devices, and growing interest in making our planet better. Understanding edge AI is an important first step to generating value.

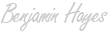

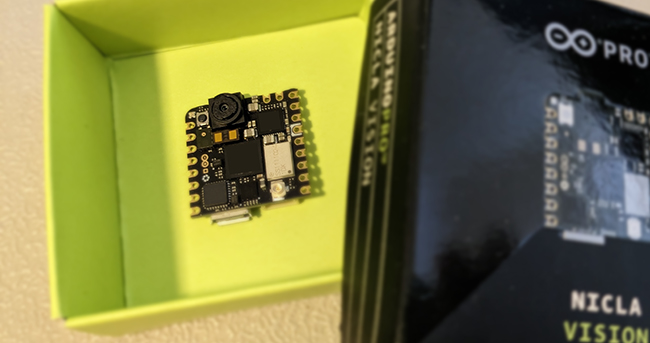

Nicla Vision will be used in the demo later. It measures less than 23x23mm.

What is Edge AI?

Folks in the field will give you different definitions of edge AI and mine won’t be the ultimate or conclusive one. By taking advantage of relatively cheap compute, low-power/field-ready devices, and AI algorithms, edge AI enables companies, governments, or individuals to deploy smart systems anywhere. But why take it from me? Here’s how one AI, ChatGPT, describes edge AI:

Edge AI refers to the deployment of artificial intelligence (AI) algorithms on edge devices, such as smartphones, cameras, sensors, and other embedded systems. By processing data locally on edge devices rather than sending it to a remote server, edge AI enables faster and more efficient real-time processing of data, as well as greater privacy and security of sensitive data.

Why is it important?

Many smart people tell you to answer “why” before “how” and that’s exactly what we need to do with edge AI. So you have AI applications running today or maybe you’re considering them? Why should you consider deploying to embedded or edge devices? Here’s why.

Common use cases include predictive maintenance, equipment efficiency, defect detection, fall detection, retail customer habits, automated retail checkout systems, visual security (verifying identity or checking if PPE is being worn)… and many more. Essentially, any repeatable decision in the field can be improved by applying AI. Value is generated by pushing decisions closer to real-time and minimizing the data processing needs. Additionally, the closer the use case is to the field, the easier it is to understand and maintain.

Another commonly overlooked advantage relates to the rising power consumption and cost of advanced computing (CPUs, GPUs). There’s a strong correlation between the performance of the best AI models today and their power draw. While the value per watt is still economical, this cost is something to consider, and low-power edge devices are one way to keep it in check.

How does it work?

Understanding how it works requires some knowledge of the fields that intersect and form edge AI: microcontrollers and AI. Let’s quickly recap these topics:

- AI and deep neural networks - If you’re familiar with this topic, just recall that these models typically require mountains of data and powerful hardware for both training and inferencing. Advances are changing that today. If you’re less familiar, check out my previous post on the topic.

- Microcontrollers - These low-power devices are becoming more common each year by offering flexibility and tiny form factors. Much of the community's guidance lives in open-source channels, which can lead to some confusion when getting started.

Nicla Vision and MicroSD shown for scale.

In this demo, we will be classifying whether or not a street parking space is available. While this is a toy example, the use cases mentioned above (defect detection, predictive maintenance, etc.) can all be addressed by repurposing the same approach.

Let's take a peek at the training data - take a look at this image showing 9 examples (out of ~400) of both a "free" (or available) and "taken" parking space. Red boxes indicate a parking space that has been taken by a car. Green boxes indicate a parking space that is free. You'll notice in a few examples there are trash cans, pedestrians, other parked cars, and even cars in motion. The keen observer you are may have also noticed variations in time of day, cloud cover in the background, and snow on the ground. Our model must be able to account for these scenarios.

Each image is 120x68 but has been increased 200% for effect.
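To make the data setup a little more concrete, here is a minimal sketch of splitting labeled examples into training and test sets while keeping the "free"/"taken" balance. The file names, the even 200/200 class balance, the 80/20 ratio, and the `stratified_split` helper are all assumptions for illustration, not the actual data set or tooling used for this post.

```python
import random

def stratified_split(samples, test_fraction=0.2, seed=42):
    """Split (filename, label) pairs into train/test, preserving label balance."""
    rng = random.Random(seed)
    by_label = {}
    for filename, label in samples:
        by_label.setdefault(label, []).append((filename, label))

    train, test = [], []
    for label in sorted(by_label):
        group = by_label[label]
        rng.shuffle(group)
        n_test = round(len(group) * test_fraction)
        test.extend(group[:n_test])
        train.extend(group[n_test:])
    return train, test

# Hypothetical file names standing in for the ~400 labeled 120x68 images.
samples = [("free_%03d.jpg" % i, "free") for i in range(200)]
samples += [("taken_%03d.jpg" % i, "taken") for i in range(200)]

train, test = stratified_split(samples)
print(len(train), "training images,", len(test), "test images")
```

Holding out a test set that contains both labels in the same proportion as the training set keeps the evaluation honest when the model meets snow, pedestrians, or trash cans it has not seen before.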

Tools

We'll be using a trio of tools to accomplish this parking detection use case.

- Nicla Vision - A device equipped with a camera, microphone, motion sensor, distance sensor, and Wi-Fi and Bluetooth Low Energy connectivity

- Edge Impulse - A platform to help developers create, train, and deploy models for edge devices

- OpenMV IDE - An IDE that bridges your workstation and the Nicla Vision (among other devices)

It's alive!

Process

As with most AI/ML problems, there will be a core training and evaluation step. However, with edge AI, there are extra considerations to take into account. What problem are we solving? What type of device do we have/need, and with what specs? What quantity and quality of data do we have/need? How do we deploy and maintain the solution? Answering these questions at the start will get you off on the right foot. Here's an over-generalized, simplified approach for this type of solution:

- Step 0: Understand the problem - What’s the value? Who benefits? Who cares? How much uplift can we expect?

- Step 1: Frame the problem in terms of AI/ML and consider edge constraints

- Step 2: Define your data and data needs

- Step 3: Model and Evaluate (train, test, metrics, etc.)

- Step 4: Deploy and Maintain - Do you need to minify the model to run on your microcontroller?
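As a rough illustration of the edge constraints in Step 1 and the minification question in Step 4, the sketch below estimates how many bytes one camera frame and one set of model weights occupy. The parameter count is a made-up figure, not the size of the model trained for this post, and the helper names are hypothetical. RGB565 stores 2 bytes per pixel and grayscale stores 1.

```python
BYTES_PER_PIXEL = {"RGB565": 2, "GRAYSCALE": 1}

def frame_bytes(width, height, pixformat="RGB565"):
    """Bytes needed to hold one uncompressed frame."""
    return width * height * BYTES_PER_PIXEL[pixformat]

def model_bytes(num_params, bytes_per_weight):
    """Approximate weight storage, ignoring metadata and activations."""
    return num_params * bytes_per_weight

HYPOTHETICAL_PARAMS = 50_000  # assumption for illustration only

print("120x68 RGB565 frame:", frame_bytes(120, 68), "bytes")
print("320x240 RGB565 frame:", frame_bytes(320, 240), "bytes")
print("float32 weights:", model_bytes(HYPOTHETICAL_PARAMS, 4), "bytes")
print("int8 weights:", model_bytes(HYPOTHETICAL_PARAMS, 1), "bytes")
```

Storing weights as 8-bit integers instead of 32-bit floats cuts weight storage to a quarter, which is a large part of why shrinking the model matters on a microcontroller.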

In the parking detection case, the problem is a toy one, so step 0 doesn’t carry much weight. The problem is image classification, which requires labeled training data. The training data is supplied by me: approximately 400 images at 120x68 pixels, though a “good enough” model was trained using about 200 images during testing. We’ll be using Edge Impulse to train and quantize the model. We’ll leverage OpenMV to deploy the model on the Nicla Vision.
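To give a feel for what quantizing a model means, here is a minimal sketch of affine 8-bit quantization applied to a handful of made-up weights. It is a generic illustration of the idea, not Edge Impulse's implementation, and the function names are hypothetical.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers with a shared scale and zero point."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    lo, hi = min(values), max(values)
    lo, hi = min(lo, 0.0), max(hi, 0.0)  # make sure 0.0 is representable
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 if all zeros
    zero_point = round(qmin - lo / scale)
    quantized = [
        max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point

def dequantize(quantized, scale, zero_point):
    """Recover approximate floats from the integer representation."""
    return [(q - zero_point) * scale for q in quantized]

weights = [-0.51, -0.02, 0.0, 0.13, 0.77]  # made-up example weights
q, scale, zero_point = quantize(weights)
restored = dequantize(q, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("int8 values:", q)
print("largest round-trip error:", max_error)
```

Each weight now fits in one byte, at the cost of a small rounding error bounded by the scale. Whether that error is acceptable is something the evaluation in Step 3 has to confirm on the quantized model.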

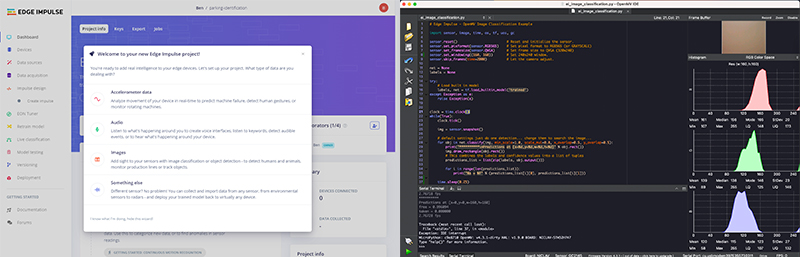

Left: Edge Impulse; Right: OpenMV IDE

Code

The code running on the Nicla device is quite short (fewer than 100 lines) but relies on tools in the MicroPython ecosystem. The process has essentially three parts:

- Import minimal amounts of resources (sensor, image, time, etc)

- Load the trained model

- Loop and process sensor data with the model

This code can be extended to perform more robust checks on the image, to aggregate the data over time, or to report back to a centralized platform. You can even integrate with Twilio to send a text message when an event occurs. In this example, knowing a car has left the parking space could be valuable when planning where to park.
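As one example of aggregating the data over time, the sketch below averages the "taken" score over the last few frames and reports only when the smoothed state flips. The `SpaceMonitor` class, the window size, the threshold, and the sample scores are assumptions for illustration. It sticks to plain lists so it should be portable to MicroPython, but it was not written against the device.

```python
class SpaceMonitor:
    """Smooth per-frame 'taken' scores and report state changes."""

    def __init__(self, window=5, threshold=0.6):
        self.window = window
        self.threshold = threshold
        self.scores = []
        self.state = None  # unknown until the window fills once

    def update(self, taken_score):
        """Add one score; return 'free' or 'taken' on a change, else None."""
        self.scores.append(taken_score)
        if len(self.scores) > self.window:
            self.scores.pop(0)
        if len(self.scores) < self.window:
            return None
        average = sum(self.scores) / len(self.scores)
        new_state = "taken" if average >= self.threshold else "free"
        if new_state != self.state:
            self.state = new_state
            return new_state
        return None

# Made-up scores: a one-frame spike (say, a pedestrian), then a car arrives.
monitor = SpaceMonitor(window=3, threshold=0.6)
scores = [0.1, 0.1, 0.9, 0.1, 0.1, 0.9, 0.95, 0.9, 0.9]
events = [monitor.update(s) for s in scores]
print([e for e in events if e is not None])  # prints ['free', 'taken']
```

On the device, the value passed to `update` would be the confidence paired with the "taken" label in `predictions_list`, and a "free" event would be the moment to send a notification. The base script that such an extension would build on follows.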

```python
# Edge Impulse - OpenMV Image Classification Example

import sensor, image, time, os, tf, uos, gc

sensor.reset()                       # Reset and initialize the sensor.
sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
sensor.set_windowing((160, 160))     # Set 160x160 window.
sensor.skip_frames(time=2000)        # Let the camera adjust.

net = None
labels = None

try:
    # Load built in model
    labels, net = tf.load_builtin_model('trained')
except Exception as e:
    raise Exception(e)


clock = time.clock()
while(True):
    clock.tick()

    img = sensor.snapshot()

    # default settings just do one detection... change them to search the image...
    for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
        print("**********\nPredictions at [x=%d,y=%d,w=%d,h=%d]" % obj.rect())
        img.draw_rectangle(obj.rect())
        # This combines the labels and confidence values into a list of tuples
        predictions_list = list(zip(labels, obj.output()))

        for i in range(len(predictions_list)):
            print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

    # Slow the loop down so the terminal is not flooded with predictions.
    time.sleep(0.25)

    print(clock.fps(), "fps")
```

Results

When running the model connected to OpenMV, you can see in the top-right corner of the image below a pedestrian walking in the lower portion of the frame. This pedestrian did not cause the model to report the parking space as taken. More evaluation would be required, but this is a good sign that the model is robust and not overly susceptible to noise (pedestrians, trash cans, etc.). In the bottom-left portion of the screen capture, you’ll notice the weight given to the “free” and “taken” labels in real time. The loop is intentionally slowed down using time.sleep() to avoid flooding the terminal.

Unfortunately no car arrived during testing but the predictions are still accurate (space is free).

Just to recap, we reviewed edge AI as a concept, discussed the potential value, and demonstrated how a simple device/sensor in the field can be taught to use powerful tools like artificial intelligence. We used a Nicla Vision board equipped with a 2MP color camera to detect whether a parking spot is free or taken. Of course, this use case is a toy example that only affects me, but with a little imagination, this approach can be extended to use cases including preventative maintenance on critical equipment or infrastructure affecting millions of people.

Considerations and Next Steps

This space is new but maturing. Next steps for the model include building pipelines to make the training data set larger and more robust (filled with plenty of examples not already included), and developing best practices around deployment strategy to make this approach durable, repeatable, and reliable. Next steps for edge AI are understanding the potential value, the limitations, and what the edge, IoT, and AI spaces will bring in the near future. Start thinking through how edge AI can help unlock value for you, your organization, and your community.

Additional Resources

- Arduino Store - Nicla Vision

- Edge Impulse - Arduino Nicla Vision Overview

- Edge Impulse: Adding sight to your sensors

- Getting started with Nicla Vision

- NVIDIA Blog: What is Edge AI?